September 2014 Issue

Topics

Visions of reality: Insights into information processing by the brain

"I am intrigued by the mechanisms underlying motor control and visual perception by our brain," says Shunji Satoh, an associate professor at the Department of Human Media Systems at the University of Electro-Communications. "We develop computational models to analyze experimental observations."

In motor control, Satoh and his group are developing computational theories based on control theory, robotics, and learning theory to investigate puzzles such as how humans are able to move and pick up a drink, a process that the brain is able to compute and execute in less than 200 ms.

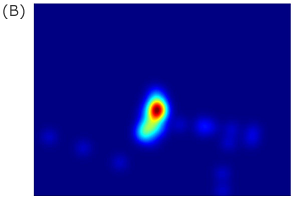

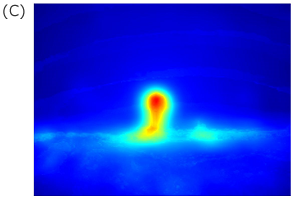

"We are also working on visual perception," says Satoh. "For example, we are developing computer algorithms and neural models to clarify well known experimental findings such as the brain filling in the effects of the blind spot in visual fields."

Recently, one of the projects being conducted by Satoh and colleagues was acknowledged as the world's number one in the 'MIT Saliency Benchmark' (8 August 2014).

"I am confident that our research will find applications in assisting people with poor vision," says Satoh. "Other applications include driver assistance and robotics."

Relevant publications

- Xuehua HAN, Shunji SATOH, Daiki NAKAMURA. Kazuki URABE, "Unifying computational models for visual attention yields better scores than state-of-the-art models," Advances in Neuroinformatics, 2014 (accepted)

- Shunji SATOH, "Computational identity between digital image inpainting and filling-in process at the blind spot," Neural Computing and Applications, 21(4), pp. 613-621 (2012)