March 2021 Issue

Research Highlights

Machine learning

Improving the counting capability of Internet-of-Things systems

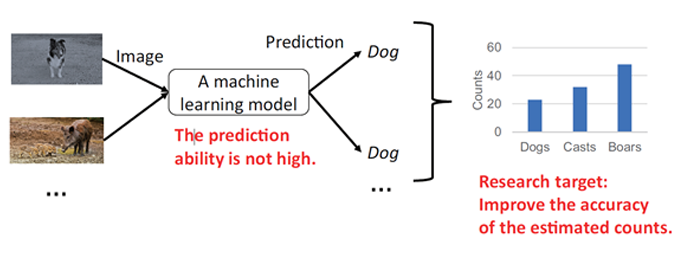

Devices that automatically recognize objects, animals or people are becoming increasingly widespread. Such recognition usually relies on sensors or cameras that are part of an Internet of Things (IoT) system connected to a neural network. The recorded object is then examined and classified using machine-learning techniques.

Often, the information actually needed from automatic recognition is a count: for example, the number of deer captured by a camera trap. Normally, the accuracy of such a count depends on how accurately the machine-learning model recognizes individual objects. In the camera-trap example, if deer are recognized with low accuracy, the total deer count will also be inaccurate. Now, however, Yuichi Sei and Akihiko Ohsuga from the University of Electro-Communications, Tokyo, Japan, have shown that it is possible to obtain accurate total counts even when recognition accuracy is low.

The researchers’ trick lies in using a so-called confusion matrix: a table of percentages expressing how well the IoT system distinguishes between the objects it records. If the objects are deer, cats and dogs, for example, the matrix records how often a deer is correctly recognized as a deer or wrongly recognized as a cat or a dog, and likewise for every other combination.
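As an illustration, a confusion matrix for the deer/cat/dog example can be built from a labeled validation set: count how often objects of each true class receive each predicted label, then divide by the number of objects of that true class. The Python sketch below is a minimal version under stated assumptions: labels are plain strings, the function name `confusion_matrix` and the sample data are hypothetical, and the entries are fractions rather than percentages (multiply by 100 for the latter).

```python
from collections import Counter


def confusion_matrix(true_labels, predicted_labels, classes):
    """Return M where M[i][j] is the fraction of objects whose true class
    is classes[i] that the system recognized as classes[j]."""
    pair_counts = Counter(zip(true_labels, predicted_labels))
    true_counts = Counter(true_labels)
    matrix = []
    for t in classes:
        total = true_counts[t]
        # Guard against a class that never appears in the labeled data.
        row = [pair_counts[(t, p)] / total if total else 0.0 for p in classes]
        matrix.append(row)
    return matrix


# Hypothetical validation data: true labels and what the system reported.
classes = ["deer", "cat", "dog"]
true = ["deer", "deer", "deer", "deer", "cat", "cat", "dog", "dog"]
pred = ["deer", "deer", "deer", "cat", "cat", "dog", "dog", "dog"]
M = confusion_matrix(true, pred, classes)
print(M)  # [[0.75, 0.25, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]]
```

Each row sums to 1, so row i can be read as the probabilities of the possible recognition outcomes for an object that truly belongs to class i.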

The confusion matrix comes into play after the IoT system has been ‘trained’. Training means that the system’s machine-learning model is given a large set of example images, for each of which it is ‘told’ what the image shows (for example, a deer). From these, the model develops a procedure for deciding what is on a previously unseen image. Applying such a trained system, without further correction, is referred to as the baseline.

Sei and Ohsuga developed a way to correct, as effectively as possible, the overall counts estimated in a baseline run for errors in the recognition of individual objects. They applied Bayes’ theorem, a mathematical formula that gives the probability of an outcome by taking into account the conditional probabilities relevant to that outcome. In IoT object recognition, these conditional probabilities are obtained from the numbers in the confusion matrix.
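One standard way to turn Bayes’ theorem into a count correction is iterative Bayesian unfolding. For each predicted class j, Bayes’ theorem gives P(true i | predicted j) ∝ P(predicted j | true i) · P(true i). The baseline tally for j is redistributed over the true classes in those proportions, and the resulting estimate of the true totals serves as the prior for the next round. The Python sketch below assumes this technique, a row-normalized confusion matrix, and that every object is assigned to one of the known classes. It is not necessarily the estimator Sei and Ohsuga use, and the function name and numbers are hypothetical. In the example, with 100 deer, 50 cats and 50 dogs and 80% recognition accuracy, the baseline tally would be expected to report 90 deer, 55 cats and 55 dogs.

```python
def bayes_corrected_counts(observed, confusion, iterations=100):
    """Estimate the true number of objects per class from baseline tallies.

    observed[j]:     how many objects the baseline labeled as class j
    confusion[i][j]: P(labeled as class j | truly class i), i.e. a
                     row-normalized confusion matrix
    """
    k = len(observed)
    estimate = [sum(observed) / k] * k  # uniform starting prior
    for _ in range(iterations):
        updated = [0.0] * k
        for j in range(k):
            # Bayes' theorem: P(true i | labeled j) is proportional to
            # P(labeled j | true i) * P(true i); the current estimate
            # plays the role of the (unnormalized) prior P(true i).
            weights = [confusion[i][j] * estimate[i] for i in range(k)]
            norm = sum(weights)
            if norm == 0:
                continue  # no true class can produce label j
            for i in range(k):
                # Share the objects labeled j among the true classes.
                updated[i] += observed[j] * weights[i] / norm
        estimate = updated  # the posterior totals become the next prior
    return estimate


# Hypothetical example: 100 deer, 50 cats, 50 dogs, 80% accuracy.
CONFUSION = [
    [0.8, 0.1, 0.1],  # true deer
    [0.1, 0.8, 0.1],  # true cat
    [0.1, 0.1, 0.8],  # true dog
]
baseline = [90, 55, 55]  # expected baseline tally for deer, cat, dog
corrected = bayes_corrected_counts(baseline, CONFUSION)
print([round(x, 1) for x in corrected])  # [100.0, 50.0, 50.0]
```

Starting from a uniform guess avoids assuming in advance which class is most common. The fixed iteration count is a hypothetical default; in practice one might instead stop once the estimates change by less than a chosen tolerance.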

The scientists tested their method in six experiments, using images from several image databases for training, for generating the confusion matrix and for testing. On average, the estimation errors were 64% lower than in the baseline runs.
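The article does not state which error measure was used. As a hedged illustration, assume the error of a run is the sum over classes of the absolute difference between estimated and true totals. A relative reduction then compares the corrected error with the baseline error: a 64% reduction means, for example, that a baseline error of 25 objects falls to 9. The function names and numbers in this sketch are hypothetical.

```python
def total_count_error(estimated, true):
    """Assumed error measure: sum over classes of |estimated - true| totals."""
    return sum(abs(e - t) for e, t in zip(estimated, true))


def error_reduction(baseline_error, corrected_error):
    """Fraction by which the corrected error is smaller than the baseline error."""
    return (baseline_error - corrected_error) / baseline_error


# Hypothetical deer/cat/dog totals: baseline tally vs. true counts.
print(total_count_error([90, 55, 55], [100, 50, 50]))  # 20
print(error_reduction(25, 9))  # 0.64
```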

The work of Sei and Ohsuga is innovative: whereas previous studies aimed to improve the classification accuracy of each individual observation, the present study aims to improve the accuracy of the total counts.

Objective of the research.