February 2021 Issue

Research Highlights

Innovative automated control systems: Control-theoretic approach for fast online reinforcement learning

Reinforcement learning (RL) is an effective way to design model-free linear quadratic regulators (LQRs) for linear time-invariant networks whose state-space models are unknown. RL has wide-ranging applications, including industrial automation, self-driving automobiles, power grid systems, and even forecasting stock prices in financial markets.

However, conventional RL can result in unacceptably long learning times when network sizes are large. This can pose a serious challenge for real-time decision-making.

Tomonori Sadamoto at the University of Electro-Communications, Aranya Chakrabortty at North Carolina State University, USA, and Jun-ichi Imura at the Tokyo Institute of Technology have proposed a fast RL algorithm that enables online control of large-scale network systems.

Their approach constructs a compressed state vector by projecting the measured state through a projection matrix. This matrix is built from online measurements of the states so that it captures the dominant controllable subspace of the open-loop network model. An RL controller is then learned using the reduced-dimensional state instead of the original state, such that the resulting cost is close to the optimal LQR cost.
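The compression step can be illustrated with a minimal sketch. It is not the authors' algorithm: it assumes the projection matrix is taken from a truncated singular value decomposition of recorded state snapshots, which is one common way to estimate a dominant subspace from data. The function names, the `energy` threshold, and the toy 50-state network (built so that its controllable subspace has dimension 4) are all hypothetical.

```python
import numpy as np

def projection_from_snapshots(X, energy=0.99):
    """Return P (n x r) with orthonormal columns spanning the dominant
    directions of the snapshot matrix X (n x T).
    Assumption: a truncated SVD stands in for the paper's construction."""
    U, s, _ = np.linalg.svd(X, full_matrices=False)
    cum = np.cumsum(s**2) / np.sum(s**2)
    # Smallest r whose leading singular values hold the requested energy.
    r = int(np.searchsorted(cum, energy)) + 1
    return U[:, :r]

def simulate(A, B, x0, inputs):
    """Roll out x[k+1] = A x[k] + B u[k]; states are returned as columns."""
    xs = [x0]
    for u in inputs:
        xs.append(A @ xs[-1] + B @ u)
    return np.column_stack(xs)

# Hypothetical toy network: n states, m inputs, and a controllable
# subspace of dimension r_true by construction.
rng = np.random.default_rng(0)
n, m, r_true, T = 50, 2, 4, 200
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
R1, _ = np.linalg.qr(rng.standard_normal((r_true, r_true)))
D = np.zeros((n, n))
D[:r_true, :r_true] = 0.9 * R1          # stable dynamics on the subspace
D[r_true:, r_true:] = 0.5 * np.eye(n - r_true)
A = Q @ D @ Q.T
B = Q[:, :r_true] @ rng.standard_normal((r_true, m))

# Excite the system with random inputs and record the measured states.
X = simulate(A, B, np.zeros(n), rng.standard_normal((T, m)))

P = projection_from_snapshots(X)
z = P.T @ X[:, -1]                      # compressed state for the learner
print("original dimension:", n, "compressed dimension:", z.size)
```

A controller learned on `z` would then be applied to the full network through `P`, for example as `u = -K_r @ (P.T @ x)` with a reduced gain `K_r`; that learning step is omitted here.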

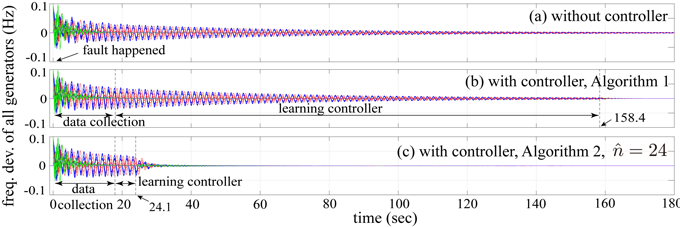

The lower dimensionality of this approach drastically reduces the computational complexity of learning. Moreover, the stability and optimality of the control performance are evaluated theoretically using robust control theory, by treating the dimensionality-reduction error as an uncertainty. Numerical simulations on a large-scale, 100-dimensional power grid model showed that learning was almost 23 times faster while control performance was maintained.
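A rough parameter count suggests why a smaller state helps. The sketch below assumes a Q-learning formulation of LQR, in which the Q-function is a quadratic form in the stacked state and input and is described by a symmetric matrix. The article does not say which RL scheme the authors use, and the input dimension (10) and reduced state dimension (10) are hypothetical values chosen only for illustration.

```python
def q_function_parameters(state_dim, input_dim):
    """Independent entries of the symmetric matrix H in
    Q(x, u) = [x; u]^T H [x; u] (assumed Q-learning LQR setting)."""
    d = state_dim + input_dim
    return d * (d + 1) // 2

# 100 states as in the simulation above; the other numbers are hypothetical.
full = q_function_parameters(state_dim=100, input_dim=10)
reduced = q_function_parameters(state_dim=10, input_dim=10)
print("unknowns with the full state:   ", full)
print("unknowns with the reduced state:", reduced)
```

Because the number of unknowns grows quadratically with the state dimension, a least-squares learner needs far fewer samples per update on the compressed state. The speed-up actually obtained depends on the reduced dimension and on the learning scheme used.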

The main contribution of the paper is to show how two individually well-known concepts in dynamical system theory and machine learning, namely, model reduction and reinforcement learning, can be combined to construct a highly efficient real-time control design for extreme-scale networks.